Originally published on Medium in September of 2019

Recently, we moved one of our applications from Heroku over to Amazon’s Elastic Beanstalk service, where we could exercise more granular control.

As part of this move, we decided to implement continuous deployment with CircleCI.

While most of the instructions for this process were (fairly) easily found through Google, we just had to have a few special snowflake moments:

- Our application is written in PHP, while the tutorial we liked the best was for a Node repository

- We needed to automatically create a directory on the server if it didn’t already exist

In this article, I’ll outline the steps we followed, linking to the resources we used.

The Totally-Awesome CircleCI / Elastic Beanstalk Guide (nope, not ours)

We were excited to find Simon McClive’s wonderfully-detailed article Continuous Integration with CircleCI 2.0, GitHub and Elastic Beanstalk. In it, he lays out the A-to-Z process of connecting a GitHub repository to CircleCI and Elastic Beanstalk.

Thank you, Simon!

His work got us 95% of the way and undoubtedly shaved several days off of the project!

I recommend following his tutorial and customizing it a bit based on your needs and on our lessons learned, documented below.

AWS Security

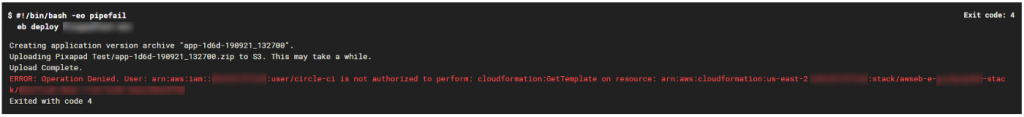

We ran into a few problems granting the circle-ci user access to CloudFormation’s GetTemplate action, the S3 bucket, and a few other functions, despite creating the recommended policies.

While this was most likely caused by user error, a few hours of tinkering yielded no measurable progress. As a temporary work-around, we attached the AWSElasticBeanstalkFullAccess managed policy to our IAM user. After we fine-tune everything, we’ll update the user’s access (and this article) with the recommended security policy, if it differs from Simon’s guidance.
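If you’d rather script that work-around than click through the IAM console, here’s roughly what it looks like. This is a minimal Python sketch, not what we actually ran. It assumes a boto3-style IAM client, and `grant_managed_policy` / `managed_policy_arn` are hypothetical helpers wrapped around the real `attach_user_policy` call. The client is passed in so the sketch can be exercised without AWS credentials.

```python
# Minimal sketch (hypothetical helper names): attach an AWS-managed
# policy to an IAM user, like the temporary work-around above.

MANAGED_POLICY_PREFIX = "arn:aws:iam::aws:policy/"


def managed_policy_arn(policy_name: str) -> str:
    """Build the ARN of an AWS-managed IAM policy from its name."""
    return MANAGED_POLICY_PREFIX + policy_name


def grant_managed_policy(iam_client, user_name: str, policy_name: str) -> str:
    """Attach an AWS-managed policy to user_name and return the policy ARN.

    iam_client is assumed to behave like boto3.client("iam"); it is passed
    in so the helper can be exercised without AWS credentials.
    """
    arn = managed_policy_arn(policy_name)
    iam_client.attach_user_policy(UserName=user_name, PolicyArn=arn)
    return arn
```

In real use you’d pass `boto3.client("iam")`. AWS does rename and retire managed policies from time to time, so check the IAM console for the current name before relying on this one. Swap in a narrower custom policy once the permission issues are sorted out.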

CircleCI

Simon and his crew at Gradient use Node, and they were kind enough to make their Docker image publicly available.

Since our app is written in PHP, we used the circleci/php:7.2-node-browsers Docker image instead.

We also needed PDO and Composer. Here’s our full config.yml file:

```yaml
version: 2
jobs:
  build:
    branches:
      only:
        - staging
    working_directory: ~/build
    docker:
      - image: circleci/php:7.2-node-browsers
    steps:
      - checkout
      - run:
          name: APT Installs (ZIP, PDO, MySQL, Composer)
          command: |
            sudo docker-php-ext-install zip
            sudo docker-php-ext-install pdo pdo_mysql
            sudo apt-get install -y software-properties-common
            sudo composer self-update
      - run:
          name: Install Python and PIP
          command: |
            sudo apt-get install -y python3.7
            sudo apt-get install -y python3-pip
            sudo pip3 install --upgrade pip
            sudo pip3 install --upgrade awscli
            sudo pip3 install --upgrade awsebcli
      - run:
          name: Setup AWS credentials
          command: |
            mkdir -p ~/.aws && printf "[profile eb-cli]\naws_access_key_id = $REDACTED\naws_secret_access_key = $REDACTED" > ~/.aws/config
      - deploy:
          name: Deploy to Elastic Beanstalk
          command: |
            eb deploy YOUR-ENV-NAME
      # Download and cache dependencies
      - restore_cache:
          keys:
            # "composer.lock" can be used if it is committed to the repo
            - v1-dependencies-{{ checksum "composer.json" }}
            # fallback to using the latest cache if no exact match is found
            - v1-dependencies-
      - restore_cache:
          keys:
            - node-v1-{{ checksum "package.json" }}
            - node-v1-
      - run:
          name: Install app dependencies
          command: |
            composer install -n --prefer-dist
      - save_cache:
          key: v1-dependencies-{{ checksum "composer.json" }}
          paths:
            - ./vendor
      - run:
          name: Database Setup
          command: |
            vendor/bin/phinx migrate -e staging
```
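The two restore_cache steps depend on how CircleCI matches cache keys. As we understand CircleCI’s documentation, keys are tried in the order listed and each one is treated as a prefix. The first key with any match wins, and if several saved caches match it, the most recent one is restored. `{{ checksum "composer.json" }}` expands to a base64-encoded SHA-256 hash of the file. The Python sketch below models that lookup. `pick_cache`, `cache_key`, and the `(key, created_at)` pairs are hypothetical stand-ins, not a CircleCI API.

```python
import base64
import hashlib


def checksum(content: bytes) -> str:
    """Approximate {{ checksum "file" }}: base64-encoded SHA-256 of the bytes."""
    return base64.b64encode(hashlib.sha256(content).digest()).decode("ascii")


def cache_key(prefix: str, content: bytes) -> str:
    """Build a key like v1-dependencies-{{ checksum "composer.json" }}."""
    return prefix + checksum(content)


def pick_cache(keys, saved):
    """Return the saved key restore_cache would use, or None.

    keys:  candidate keys, in the order listed under restore_cache.
    saved: (key, created_at) pairs for caches saved by earlier builds.
    """
    for candidate in keys:
        # Prefix match; an exact key trivially matches itself as a prefix.
        matches = [(created, key) for key, created in saved if key.startswith(candidate)]
        if matches:
            # The most recently created matching cache wins.
            return max(matches)[1]
    # Nothing matched: the build starts with an empty vendor directory.
    return None
```

When `composer.json` is unchanged, the exact key hits. After an edit, the bare `v1-dependencies-` prefix falls back to the newest older cache, so `composer install` starts from a warm `vendor` directory instead of an empty one.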

This particular workflow automates deployment of our staging branch. These lines from the top of the config file restrict the CircleCI build to commits pushed to that branch only:

```yaml
jobs:
  build:
    branches:
      only:
        - staging
```
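If you later want more than one branch to deploy, it helps to know how these filters are evaluated. As we read CircleCI’s docs, each `only` entry is either an exact branch name or a regular expression wrapped in slashes that must match the whole branch name. Here’s a small Python model of that rule. `branch_allowed` is our own hypothetical helper, not part of CircleCI.

```python
import re


def branch_allowed(branch: str, only: list) -> bool:
    """Model of a `branches: only:` filter (hypothetical helper).

    Plain entries must equal the branch name exactly; entries wrapped in
    slashes are regular expressions that must match the whole name.
    """
    for entry in only:
        if len(entry) > 2 and entry.startswith("/") and entry.endswith("/"):
            # Strip the slashes and require a full-string match.
            if re.fullmatch(entry[1:-1], branch):
                return True
        elif entry == branch:
            return True
    return False
```

For example, a push to `staging-hotfix` would not match a plain `staging` entry. You’d need a pattern such as `/staging.*/` for that.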

We use Phinx for our database migrations. The last run command takes care of database updates:

```yaml
- run:
    name: Database Setup
    command: |
      vendor/bin/phinx migrate -e staging
```
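Running the migration on every build is safe because Phinx records each applied migration’s version in a log table (`phinxlog` by default) and only runs versions it hasn’t seen yet. The Python/SQLite sketch below models that bookkeeping. It illustrates the idea and is not Phinx itself; the `migration_log` table and the `MIGRATIONS` entries are hypothetical.

```python
import sqlite3

# Hypothetical migrations keyed by timestamp-style version. Phinx uses PHP
# classes; plain SQL strings stand in for them here.
MIGRATIONS = {
    20190901000000: "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)",
    20190915000000: "ALTER TABLE users ADD COLUMN name TEXT",
}


def migrate(conn: sqlite3.Connection, migrations: dict) -> list:
    """Apply pending migrations in version order; return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS migration_log (version INTEGER PRIMARY KEY)"
    )
    done = {row[0] for row in conn.execute("SELECT version FROM migration_log")}
    applied = []
    for version in sorted(migrations):
        if version in done:
            continue  # already applied by an earlier build
        with conn:  # log entry is written and committed only if the migration succeeds
            conn.execute(migrations[version])
            conn.execute("INSERT INTO migration_log (version) VALUES (?)", (version,))
        applied.append(version)
    return applied
```

A second call with the same migrations applies nothing. That is why the step can sit at the end of every staging build without special handling.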

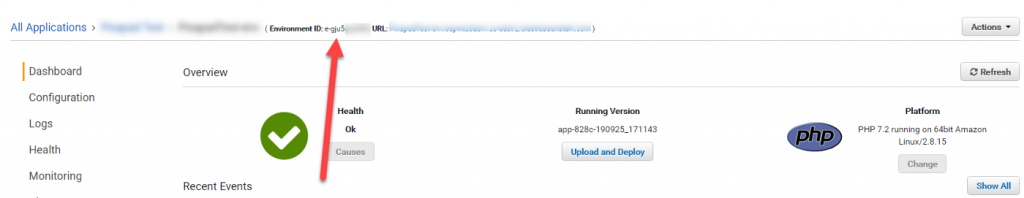

The right argument for the eb deploy command wasn’t immediately obvious to me. Or, more candidly, I over-complicated it.

The first time I ran the CircleCI workflow, I tried deploying to the actual Environment ID, which I copied from my Elastic Beanstalk dashboard:

Oops.

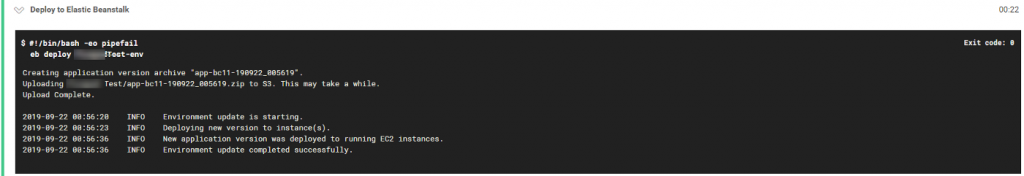

Next, I tried the actual environment name:

Much better!
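In hindsight, the difference is easy to spot. The ID shown on the dashboard starts with `e-`, while the name is the one you chose when you created the environment. If you’d like your tooling to catch this mistake, here’s a hedged Python sketch. It treats anything shaped like an ID as suspicious and resolves it to a name through the Elastic Beanstalk `describe_environments` call. The ID pattern is an assumption based on the IDs we’ve seen, and `env_name_for_deploy` is a hypothetical helper. The client is expected to behave like `boto3.client("elasticbeanstalk")`.

```python
import re

# Assumption: environment IDs look like "e-" followed by lowercase letters
# and digits, e.g. "e-abc123xyz9"; environment names are user-chosen.
ENV_ID_PATTERN = re.compile(r"e-[a-z0-9]+")


def looks_like_env_id(value: str) -> bool:
    """True if value has the shape of an environment ID rather than a name."""
    return ENV_ID_PATTERN.fullmatch(value) is not None


def env_name_for_deploy(eb_client, ref: str) -> str:
    """Return the environment name to pass to `eb deploy`.

    eb_client is assumed to behave like boto3.client("elasticbeanstalk").
    """
    if not looks_like_env_id(ref):
        return ref  # already a name
    response = eb_client.describe_environments(EnvironmentIds=[ref])
    environments = response.get("Environments", [])
    if not environments:
        # A name can also start with "e-", so fall back to using ref as-is.
        return ref
    return environments[0]["EnvironmentName"]
```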

Creating a Directory on the Server

Ironically, the last step was actually the hardest. Our requirements called for creating a folder outside of the application root. This was important because we wanted the contents of that folder to persist between deployments. The contents were dynamic, and thus weren’t stored in source control.

I spent quite a few hours trying various flavors of the mkdir command in my config.yml file. They always ran successfully, but I could never find the folder after SSHing into the server.

It wasn’t until a Stack Overflow user asked a few pointed questions that I realized I was creating the folder in the CircleCI build container instead of on the Elastic Beanstalk server.

It’s amazing how I sometimes make really simple mistakes!

In the end, the solution was simple.

As part of Simon’s tutorial, we had created a file named github-deploy.config and stored it in our .ebextensions source code directory. To it, we added just the last two lines, the 03-command entry:

```yaml
commands:
  01-command:
    command: sudo aws s3 cp s3://REDACTED/REDACTED-github-deploy /root/.ssh
  02-command:
    command: sudo chmod 600 /root/.ssh/REDACTED-github-deploy
  03-command:
    command: sudo mkdir -m 0755 -p /var/my_new_folder
```
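The `-p` flag is what makes the command safe to leave in place. On later deployments the folder already exists, so nothing is recreated and its contents survive. Here’s the same idempotent behavior sketched in Python for clarity. `ensure_dir` is a hypothetical helper. The explicit `os.chmod` mirrors `-m 0755`, which sets the new directory’s mode regardless of the umask.

```python
import os


def ensure_dir(path: str, mode: int = 0o755) -> bool:
    """Create path and any missing parents, like `mkdir -m 0755 -p`.

    Returns True if the directory was created, False if it already existed.
    An existing directory (and everything in it) is left untouched.
    """
    if os.path.isdir(path):
        return False
    os.makedirs(path, exist_ok=True)
    # makedirs' mode argument is filtered by the umask, so set it explicitly,
    # as `mkdir -m` does for the final directory.
    os.chmod(path, mode)
    return True
```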

I hope this helps you with your PHP / Elastic Beanstalk continuous integration workflow. Automating deployments saves time and makes the entire development, testing, and release process much more pleasant!

Special thanks to Simon McClive from Gradient and FelicianoTech from CircleCI!